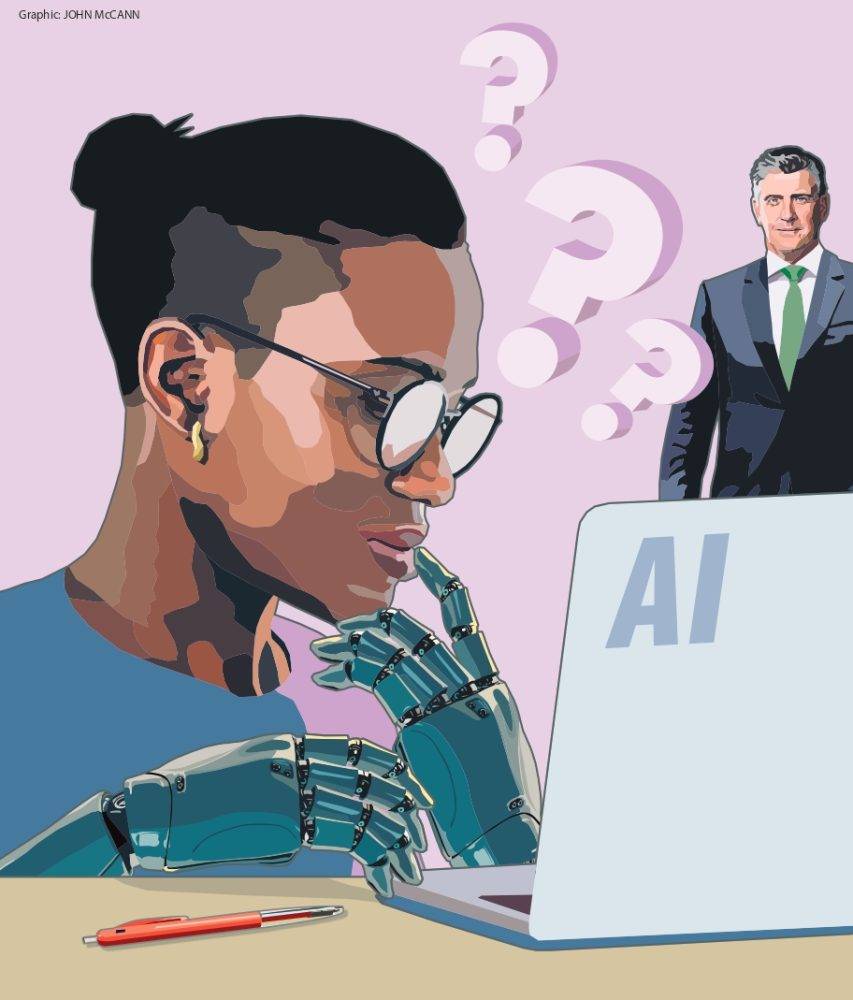

(Graphic: John McCann/M&G)

Discussions about artificial intelligence (AI) developments are romanticised in such a way that the technology is presented as an all-knowing oracle and saviour.

This appears to be a marketing strategy by big tech, but we are concerned about how uncritically these technologies are used.

There are stories aplenty of private individuals, government agencies and private domains turning to AI’s large language models to solve emerging and long-standing problems. These technologies are thought to have some kind of omniscience that allows them to “know” everything that needs to be known, and beyond.

One example of these technologies is the celebrity ChatGPT.

Many university students call on ChatGPT to intercede for them in passing their assignments and other research tasks. In fact, one of the authors of this article has experienced this tendency in his work with students at the University of Johannesburg.

In most cases, the students’ work shows an uncritical use of this technology that writes like humans.

But this intercession goes beyond the academic space to daily use by individuals in different domains.

Users of this technology fail to understand that, beyond ChatGPT’s gaps in knowledge, there are dangers lurking of which they are not aware.

More often than not, the information it produces is incorrect and riddled with inaccuracies, a problem that contradicts the omniscience the technology is supposed to have.

But there is an even more serious danger embedded in the technology, and it poses a challenge to the saviour-like reverence accorded to it.

The tool is inherently biased and discriminatory.

A common way that it discriminates against people is through the attribution of certain societal problems to particular individuals because of historical ills such as racism and sexism.

For example, it was discovered that the use of AI technology in the United States’ justice system discriminated against black Americans. It predicted that they were more prone to commit crimes or re-offend than their white counterparts. However, after rigorous research, it was discovered that this was not the case.

Another incident that exposed the embedded bias in AI systems occurred when Amazon’s hiring system was discovered to discriminate against women in job applications. The system automatically rejected applications that indicated the applicant was a woman.

AI technologies that can write sentences and read human languages, known as large language models, have been shown to perpetuate overt and covert forms of racism.

While we may be quick to object that these cases are applicable to US society and societies in the Global North, we must also understand that South Africa is a multiracial society and we have experienced cases of racial segregation, bias and discrimination.

The Global North accounts for most of the technologies that we use in South Africa. If these tools are problematic in that sphere, then it follows that, regardless of the society where they are used, they will display their problematic nature, in this case along racial or gender lines.

For those of us who work with these novel and state-of-the-art tools, issues of algorithmic discrimination are becoming very concerning, considering the large-scale adoption of AI in almost every facet of our society.

Perhaps it is pertinent to draw attention to the fact that discrimination is not a recent societal issue; in the context of South Africa especially, we are familiar with this social ill. Discrimination, which includes overt and covert racism, bias and the subjugation of some members of the human population, notably black people and women, is one of the social injustice issues that have permeated society historically and contemporarily.

Discriminatory actions in the form of racism have been discussed in academic literature, social activism and formal and informal storytelling.

Furthermore, public policies and other national and international documents from the United Nations, European Union, African Union and others have sought to mitigate discriminatory and racist ideologies and actions.

We would think that with the end of apartheid, or colonialism in general, as well as the US Civil Rights Movement, social injustices in the form of discrimination and racism would have ended.

But these social ills continue to persist and resist being mitigated; perhaps they can be likened to the myth of a cat’s “nine lives”.

It has become evident that these issues will probably be around for a very long time.

This is because a new form of racial discrimination has emerged, complicating the problem. The racist on our stoep is no longer only a human being who is actively perpetuating racist acts overtly, but also AI, especially the AI that writes and speaks like humans.

The large language models perpetuating covert kinds of racism include the much-vaunted ChatGPT 2.0, 3.5 and 4.0. These are what the students of one of the authors are using to do their assignments.

Don’t get us wrong, large language models are relevant social technologies.

They are trained to process and generate text across multiple applications to assist in domains such as healthcare, justice and education.

They construct texts, summarise documents, filter job applications and proposal applications, inform sentencing in the judiciary system and help decide who gets healthcare resources.

But let us not be deceived by the supposed oracle-like or saintly nature of large language models; they also have a troubling side.

A recent study entitled Dialect Prejudice Predicts AI Decisions About People’s Character, Employability and Criminality by Valentin Hofmann, Pratyusha Ria Kalluri, Dan Jurafsky and Sharese King underscored that AI large language models are guilty of perpetuating covert discrimination and racism towards black people, especially African-Americans and women, based on language.

These large language models associate descriptions such as dirty, ugly, cook, security and domestic worker with African-American English speakers and women. Those who speak Standard American English are associated with more “professional” jobs.

Worse still, large language models also prescribe the death penalty to African-American English speakers in a larger proportion than to Standard American English speakers for the same crime.

Why are these issues of algorithmic discrimination, bias and racism important, and why should we care?

As South Africans, given our racial, cultural and language diversities, we should be worried about these technologies.

There is a growing drive to use AI systems in almost every facet of our society, from healthcare to education and the justice system.

AI technologies, especially large language models, are particularly relevant in aspects of society such as employment and the justice system.

However, large language models are not innocuous; like other machine learning systems, they come with human bias, stereotypes and prejudice encoded in the training datasets.

This leads these models to discriminate against minorities along racial and gender lines.

The racism and discrimination embedded in large language models are not overt, as in most earlier forms of racism; they are covert in a colour-blind way.

In an experiment, a large language model interpreted a black person’s alibi as more criminal, and the person as less educated and less trustworthy, when they used African-American English.

Furthermore, large language models assign less prestigious jobs to African-American English speakers compared with Standard American English speakers.

This is not to insinuate anything, but let us assume that out of our 11 languages in South Africa, one language is deemed superior. In that case, it is obvious that only those who speak that “superior” language will be valued when large language models are used to make decisions that concern South Africans.

It has become self-evident, through the new racism on our stoep, that racism is not ending anytime soon because it resurfaces in different ways in a more covert fashion. Given South Africa’s role in working with state-of-the-art emerging technologies, what are the implications of racist technologies in a country like South Africa that already has a history marked by racism and subjugation?

It is imperative that, as a society, we reflect on the roles these technologies play in advancing racism and sexism in our contemporary epoch and work to ensure that these technologies do not become the new racist and sexist. When we call on these tools to save us, we must be critical of the information they provide and be more alert to the fact that this Oracle does not love us equally. It appears to love a certain race more than others, and to prefer a certain gender to others.

Until we fix these social issues embedded in the technologies through ethical programming, we must be wary of how we call on them to provide quick fixes in our societies.

Edmund Terem Ugar is a PhD candidate in the department of philosophy and a researcher at the Centre for Africa-China Studies at the University of Johannesburg.

Zizipho Masiza is a researcher and an operations strategist at the Centre for Africa-China Studies at the University of Johannesburg.